Today we are going to discuss how to calculate the total loss and accuracy at every epoch and how to plot them. The code below is a PyTorch implementation that does this.

For those who are interested, a summary of how I went about calculating the loss and accuracy at every epoch and plotting it all can be found here.

As a machine learning enthusiast, I was excited to see that a new version of PyTorch (PyTorch v0.4) had been released. The release brings many promising new features and performance improvements. In this post, I will show you how to calculate the total loss and accuracy at every epoch in PyTorch and plot them with matplotlib.

PyTorch is a powerful machine learning library that provides a clean interface for building deep learning models. Observing how a neural network behaves during training helps you understand it. Often, rather than just watching the training process, you want to compare the training and validation metrics of your PyTorch model.

In this post, you will learn how to collect and display metrics while training a deep learning model, and how to create graphs from the data collected during training.

First, load and normalize the CIFAR10 training and test datasets with torchvision:

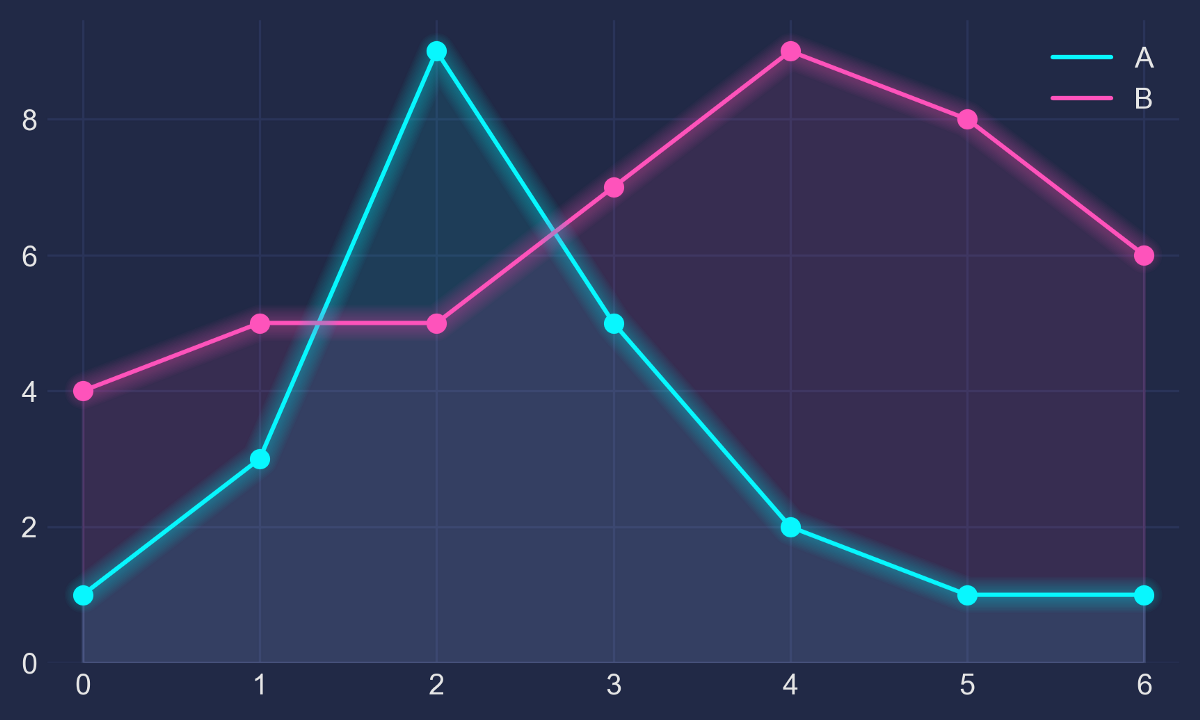

```python
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
from tqdm import tqdm

# Convert images to tensors and scale each RGB channel from [0, 1] to [-1, 1]
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])

train_set = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform)
test_set = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform)

trainloader = torch.utils.data.DataLoader(train_set, batch_size=4, shuffle=True, num_workers=2)
testloader = torch.utils.data.DataLoader(test_set, batch_size=4, shuffle=False, num_workers=2)
```

Define a convolutional neural network:

```python
# Input: 3 x 32 x 32 CIFAR10 images
model = nn.Sequential(
    nn.Conv2d(3, 32, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(2, 2),  # Output: 64 x 16 x 16
    nn.BatchNorm2d(64),

    nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
    nn.ReLU(),
    nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(2, 2),  # Output: 128 x 8 x 8
    nn.BatchNorm2d(128),

    nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),
    nn.ReLU(),
    nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(2, 2),  # Output: 256 x 4 x 4
    nn.BatchNorm2d(256),

    nn.Flatten(),  # nn.Flatten requires PyTorch 1.2 or later
    nn.Linear(256 * 4 * 4, 1024),
    nn.ReLU(),
    nn.Linear(1024, 512),
    nn.ReLU(),
    nn.Linear(512, 10),  # one score per CIFAR10 class
)
```

Define the device, the loss function, and the optimizer:

```python
# Use the first GPU if one is available, otherwise the CPU
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
model.to(device)

loss_fn = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)
```

The following code collects the losses and accuracy calculated during the training of the model.
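The shape comments in the model (64 x 16 x 16, 128 x 8 x 8, 256 x 4 x 4) and the `256 * 4 * 4` input size of the first `nn.Linear` layer follow from the output-size formula for convolution and pooling layers, floor((n + 2p - k) / s) + 1. Here is a small pure-Python sketch that checks that arithmetic; the helper names `conv_out` and `trace_cifar_model` are hypothetical, and dilation is assumed to be 1.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a Conv2d or MaxPool2d layer (dilation assumed to be 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_cifar_model(size=32):
    """Follow the height/width of a 32 x 32 CIFAR10 image through the three blocks of the model."""
    shapes = []
    for channels in (64, 128, 256):
        # Two 3x3 convolutions with padding=1 keep the spatial size unchanged
        size = conv_out(size, kernel=3, stride=1, padding=1)
        size = conv_out(size, kernel=3, stride=1, padding=1)
        # MaxPool2d(2, 2) halves it
        size = conv_out(size, kernel=2, stride=2)
        shapes.append((channels, size, size))
    return shapes


shapes = trace_cifar_model()
print(shapes)  # [(64, 16, 16), (128, 8, 8), (256, 4, 4)]

c, h, w = shapes[-1]
print(c * h * w)  # 4096, i.e. 256 * 4 * 4, the in_features of the first nn.Linear
```

If you change the input resolution or add a block, rerunning this check tells you what `in_features` the first linear layer needs.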
```python
# Per-epoch history used for plotting
train_losses = []
train_accu = []

def train(epoch):
    print('\nEpoch : %d' % epoch)
    model.train()  # training mode (e.g. BatchNorm uses batch statistics)

    running_loss = 0
    correct = 0
    total = 0

    for data in tqdm(trainloader):
        inputs, labels = data[0].to(device), data[1].to(device)

        optimizer.zero_grad()
        outputs = model(inputs)
        loss = loss_fn(outputs, labels)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()  # mean loss of this batch

        _, predicted = outputs.max(1)  # index of the highest score = predicted class
        total += labels.size(0)
        correct += predicted.eq(labels).sum().item()

    train_loss = running_loss / len(trainloader)  # average over batches
    accu = 100. * correct / total

    train_accu.append(accu)
    train_losses.append(train_loss)
    print('Train loss : %.3f | Accuracy : %.3f' % (train_loss, accu))
```

This function records the training metrics for each epoch: the loss and, since this is a classification task, the accuracy. To get the loss for an epoch, divide running_loss by the number of batches and append the result to train_losses. Accuracy is the number of correct classifications divided by the total number of classifications, so at the end of each epoch I divide the count of correct predictions by the total number of samples seen in that epoch.

Test the network on the test data:

```python
eval_losses = []
eval_accu = []

def test(epoch):
    model.eval()  # evaluation mode (e.g. BatchNorm uses running statistics)

    running_loss = 0
    correct = 0
    total = 0

    with torch.no_grad():  # gradients are not needed for evaluation
        for data in tqdm(testloader):
            images, labels = data[0].to(device), data[1].to(device)

            outputs = model(images)
            loss = loss_fn(outputs, labels)
            running_loss += loss.item()

            _, predicted = outputs.max(1)
            total += labels.size(0)
            correct += predicted.eq(labels).sum().item()

    test_loss = running_loss / len(testloader)
    accu = 100. * correct / total

    eval_losses.append(test_loss)
    eval_accu.append(accu)
    print('Test loss : %.3f | Accuracy : %.3f' % (test_loss, accu))
```

Train the network on the training data and evaluate it on the test data after every epoch:

```python
epochs = 10
for epoch in range(1, epochs + 1):
    train(epoch)
    test(epoch)
```

Plot the accuracy on the training and validation (test) datasets over the training epochs:

```python
plt.plot(train_accu, '-o')
plt.plot(eval_accu, '-o')
plt.xlabel('epoch')
plt.ylabel('accuracy')
plt.legend(['Train', 'Valid'])
plt.title('Train vs Valid Accuracy')
plt.show()
```
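To see the per-epoch bookkeeping of `train()` and `test()` without a GPU or a dataset, here is a pure-Python sketch of the same aggregation. The `epoch_metrics` helper and the toy batches are hypothetical; each batch is assumed to supply its mean loss (what `loss.item()` returns), the predicted classes, and the true labels.

```python
def epoch_metrics(batches):
    """Aggregate (batch_loss, predicted, labels) triples the way train() and test() do."""
    running_loss = 0.0
    correct = 0
    total = 0
    for batch_loss, predicted, labels in batches:
        running_loss += batch_loss                                   # like loss.item()
        total += len(labels)                                         # like labels.size(0)
        correct += sum(p == y for p, y in zip(predicted, labels))    # like predicted.eq(labels).sum().item()
    epoch_loss = running_loss / len(batches)                         # like running_loss / len(trainloader)
    accuracy = 100.0 * correct / total
    return epoch_loss, accuracy


# Hypothetical epoch with two batches of 4 samples each
toy_batches = [
    (1.5, [3, 1, 0, 7], [3, 1, 2, 7]),  # 3 of 4 correct
    (0.5, [5, 5, 9, 0], [5, 4, 9, 0]),  # 3 of 4 correct
]

train_losses, train_accu = [], []
loss, accu = epoch_metrics(toy_batches)
train_losses.append(loss)
train_accu.append(accu)
print('Train loss : %.3f | Accuracy : %.3f' % (loss, accu))  # Train loss : 1.000 | Accuracy : 75.000
```

One value per epoch is appended to each list, so after `epochs` iterations the lists have exactly `epochs` entries, which is what `plt.plot` draws.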

Plot the loss on the training and validation datasets over the training epochs:

```python
plt.plot(train_losses, '-o')
plt.plot(eval_losses, '-o')
plt.xlabel('epoch')
plt.ylabel('losses')
plt.legend(['Train', 'Valid'])
plt.title('Train vs Valid Losses')
plt.show()
```

This code draws a graph with one loss value per epoch for each dataset.

Execute this code in Google Colab.

In most training jobs we need to calculate both loss and accuracy, and the approach above is the simplest way to do it. The loss measures how far the model's predictions are from the target values; for classification, nn.CrossEntropyLoss compares the predicted class scores with the true labels. Accuracy measures how often the predicted class matches the true label: the number of correct predictions divided by the total number of predictions.
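To make the loss side concrete, here is a pure-Python sketch of what `nn.CrossEntropyLoss` computes with its default `reduction='mean'`: the negative log of the softmax probability assigned to the true class, averaged over the batch. The helper names are hypothetical, and in real training code you would simply call `loss_fn`.

```python
import math


def cross_entropy(logits, label):
    """-log(softmax(logits)[label]) for a single sample."""
    m = max(logits)  # subtract the max before exponentiating for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def mean_cross_entropy(batch_logits, labels):
    """Average per-sample loss over a batch, matching reduction='mean'."""
    losses = [cross_entropy(z, y) for z, y in zip(batch_logits, labels)]
    return sum(losses) / len(losses)


# Equal scores for 3 classes: the true class gets probability 1/3, so the loss is log(3)
print(round(cross_entropy([0.0, 0.0, 0.0], label=1), 4))  # 1.0986

# A confident, correct prediction gives a small but nonzero loss
print(cross_entropy([5.0, 0.0, 0.0], label=0))
```

This is why the loss can keep falling even after accuracy stops changing: the model can become more confident in predictions it already gets right.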

Frequently Asked Questions

How does PyTorch calculate accuracy?

In this example, PyTorch does not compute accuracy for you; it is calculated by hand from the model outputs. For each batch, `outputs.max(1)` returns the index of the highest score for each sample, which is the predicted class. `predicted.eq(labels).sum().item()` counts how many predictions match the true labels, and `labels.size(0)` adds the batch size to the running total. At the end of the epoch, the accuracy is `100. * correct / total`. The loss, by contrast, comes from the loss function (here `nn.CrossEntropyLoss`, which combines a log-softmax with the negative log-likelihood loss).
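Here is a dependency-free sketch of the same accuracy calculation on plain lists. `predicted_classes` and `accuracy_percent` are hypothetical names; with real tensors you would keep the `outputs.max(1)` and `predicted.eq(labels)` calls. The example avoids tied scores, since tie-breaking here (lowest index wins) may differ from `torch.max`.

```python
def predicted_classes(batch_logits):
    """Like `_, predicted = outputs.max(1)`: index of the largest score in each row."""
    return [max(range(len(row)), key=lambda i: row[i]) for row in batch_logits]


def accuracy_percent(batch_logits, labels):
    predicted = predicted_classes(batch_logits)
    correct = sum(p == y for p, y in zip(predicted, labels))  # like predicted.eq(labels).sum().item()
    total = len(labels)                                       # like labels.size(0)
    return 100.0 * correct / total


logits = [[0.1, 2.0, -1.0],
          [3.0, 0.5, 0.2],
          [0.0, 0.1, 0.9],
          [1.0, 0.0, 2.5]]
labels = [1, 0, 1, 0]

print(predicted_classes(logits))         # [1, 0, 2, 2]
print(accuracy_percent(logits, labels))  # 50.0
```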

How is epoch loss calculated in PyTorch?

Epoch loss is a commonly used metric that is often misunderstood. In the code above, it is the average of the per-batch losses over one pass through the data. Each call to `loss_fn` returns the mean loss \(\ell_b\) of batch \(b\); `running_loss` accumulates `loss.item()` over all \(B\) batches, and dividing by `len(trainloader)` (which equals \(B\)) gives \[ L_{\text{epoch}} = \frac{1}{B}\sum_{b=1}^{B} \ell_b . \] If all batches have the same size, this equals the mean loss over every sample in the epoch. The same counters also tell you how many images were misclassified: after an epoch, the number of misclassified images is `total - correct`.
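The `train()` and `test()` functions compute epoch loss as `running_loss / len(loader)`, which averages batch means and so treats every batch equally. When the dataset size is not a multiple of the batch size, the last batch is smaller and its samples are slightly over-weighted. The sketch below compares that convention with a sample-weighted average (equivalent to accumulating `loss.item() * labels.size(0)` and dividing by `total`), which is a swapped-in alternative rather than what the code above does. The function names and numbers are hypothetical. For CIFAR10 with `batch_size=4`, both the 50,000 training and 10,000 test images divide evenly, so the two conventions agree.

```python
def mean_of_batch_means(batch_losses):
    """What running_loss / len(loader) computes: every batch counts equally."""
    return sum(batch_losses) / len(batch_losses)


def sample_weighted_mean(batch_losses, batch_sizes):
    """Alternative: weight each batch mean by its batch size (a true per-sample average)."""
    total_loss = sum(loss * n for loss, n in zip(batch_losses, batch_sizes))
    return total_loss / sum(batch_sizes)


# Hypothetical epoch: one full batch of 4 samples and a smaller last batch of 1 sample
batch_losses = [1.0, 4.0]
batch_sizes = [4, 1]
print(mean_of_batch_means(batch_losses))                # 2.5
print(sample_weighted_mean(batch_losses, batch_sizes))  # 1.6
```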

How do you plot training Loss and Validation loss in PyTorch?

Tracking validation loss alongside training loss is one of the most important and often overlooked aspects of deep learning. This post describes a solution that is easy to implement, and the code is available on GitHub. PyTorch is an open-source deep learning library for Python. To plot training and validation loss, record the average loss of each epoch in two lists (train_losses and eval_losses in the code above) and pass both to plt.plot, one line per dataset.